How To Upload A Dataset File Into R

How to Upload Big Paradigm Datasets into Colab from Github, Kaggle and Local Motorcar

Learn how to upload and access large datasets on a Google Colab Jupyter notebook for preparation deep-learning models

Google Colab is a complimentary Jupyter notebook surroundings from Google whose runtime is hosted on virtual machines on the cloud.

With Colab, you lot demand not worry well-nigh your computer'southward memory capacity or Python package installations. You go free GPU and TPU runtimes, and the notebook comes pre-installed with machine and deep-learning modules such equally Scikit-learn and Tensorflow. That being said, all projects crave a dataset and if you are non using Tensorflow'due south inbuilt datasets or Colab's sample datasets, you will need to follow some elementary steps to have access to this information.

Understanding Colab'due south file system

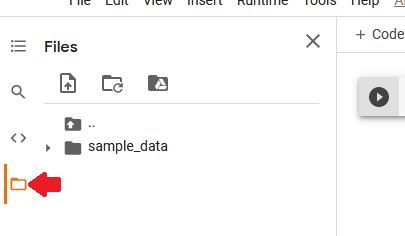

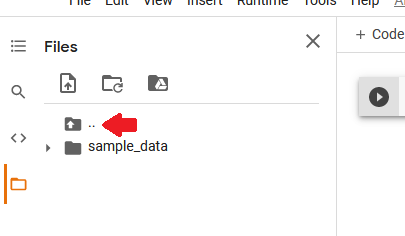

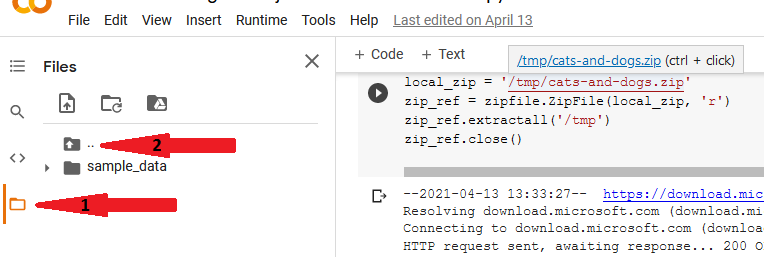

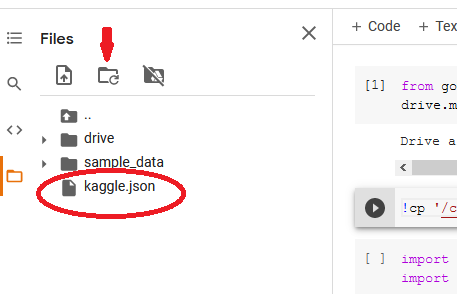

The Colab notebooks y'all create are saved in your Google drive folder. However, during runtime (when a notebook is agile) it gets assigned a Linux-based file system from the deject with either a CPU, GPU, or TPU processor, as per your preferences. You lot can view a notebook's current working directory by clicking on the folder (rectangular) icon on the left of the notebook.

Colab provides a sample-information binder with datasets that yous tin can play effectually with, or upload your ain datasets using the upload icon (next to 'search' icon). Note that once the runtime is asunder, you lose access to the virtual file system and all the uploaded information is deleted. The notebook file, however, is saved on Google drive.

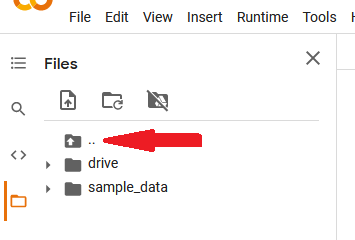

The folder icon with 2 dots in a higher place sample_data folder reveals the other core directories in Colab'south file system such as /root, /abode, and /tmp.

You tin can view your allocated deejay infinite on the bottom-left corner of the Files pane.

In this tutorial, nosotros volition explore ways to upload prototype datasets into Colab's file system from 3 mediums so they are attainable past the notebook for modeling. I chose to save the uploaded files in /tmp , just yous can also salvage them in the current working directory. Kaggle'southward Dogs vs Cats dataset will be used for sit-in.

1. Upload Data from a website such a Github

To download data from a website directly into Colab, you need a URL (a spider web-page address link) that points direct to the nada binder.

- Download from Github

Github is a platform where developers host their code and piece of work together on projects. The projects are stored in repositories, and by default, a repository is public pregnant anyone can view or download the contents into their local auto and start working on it.

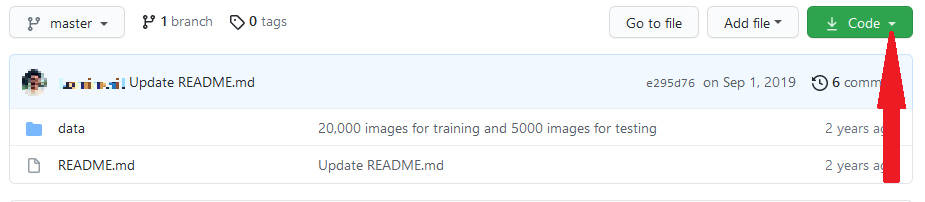

First step is to search for a repository that contains the dataset. Here is a Github repo by laxmimerit containing the Cats vs Dogs 25,000 preparation and examination images in their respective folders.

To use this process, y'all must have a free Github business relationship and be already logged in.

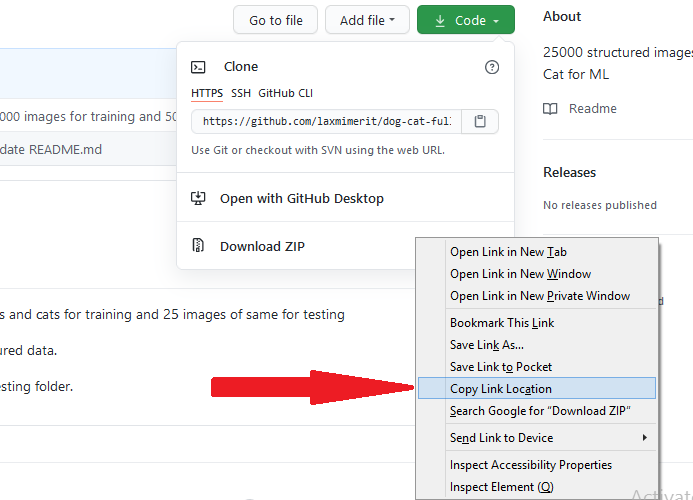

Navigate to the Github repo containing the dataset. On the repo's homepage , locate the green 'Code' button.

Click on the arrow to the right of this 'code' button. This volition reveal a driblet-down list.

Correct-click on 'download zip' and click the 'copy link location'. Note: this volition download all the files in the repo, but we will sift out unwanted files when we extract the contents on Google Colab.

Navigate to Google Colab, open a new notebook, type and run the ii lines of lawmaking below to import the inbuilt python libraries we need.

The lawmaking beneath is where we paste the link (2nd line). This entire code block downloads the file, unzips it, and extracts and the contents into the /tmp binder.

The wget Unix command: wget <link> downloads and saves spider web content into the current working directory (Detect the ! because this is a shell command meant to be executed past the organisation control-line. As well, the link must end with .zero). In the lawmaking above, we provide (optional) a download directory (/tmp), and desired name of the downloaded zip file (cats-and-dogs.nix). We use — -no-check-document to ignore SSL certificate fault which might occur on websites with an expired SSL document.

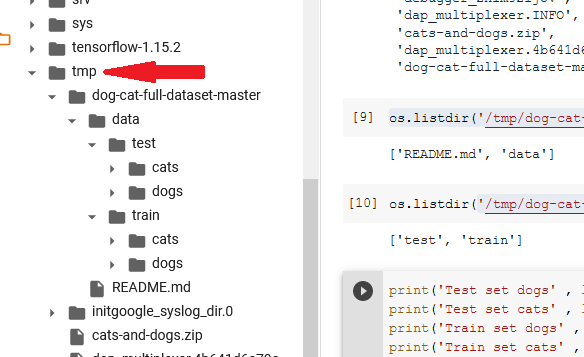

At present that our naught contents take been extracted into /tmp folder, nosotros demand the path of the dataset relative to the Jupyter notebook we are working on. As per the image below, click on folder icon (arrow ane) then file organization (2) to reveal all the folders of the virtual system.

Scroll down and locate the /tmp folder inside which all our image folders are.

Y'all tin use os.listdir(path) to render a python list of the folder contents which yous tin can salve in a variable for use in modeling. The code below shows the number of images of cats and dogs in the railroad train and test folders.

- Upload data from other websites

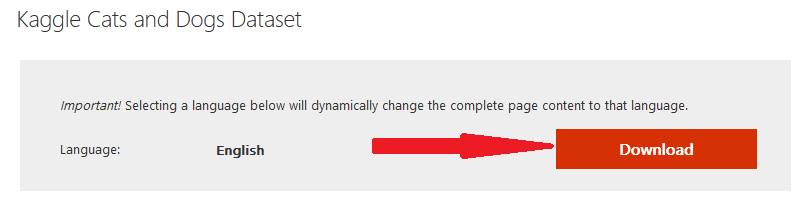

Other websites like the popular UCI repository are handy for loading datasets for car learning. A simple Google search led me to this spider web page from Microsoft where you lot can get the download link for the Dogs vs Cats dataset.

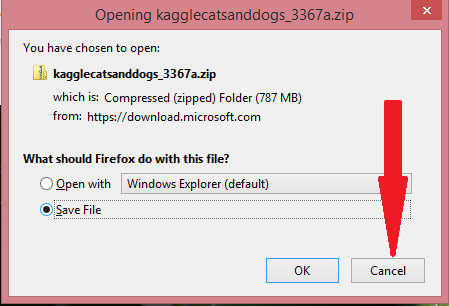

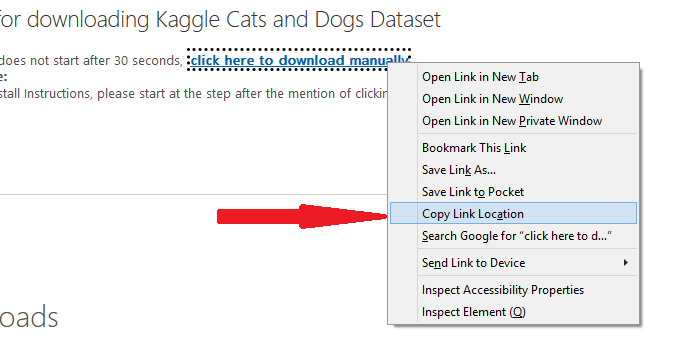

When you click on the 'Download' push button, 'Cancel' the pop-up message that asks whether to 'open' or 'save' the file.

The webpage now shows a link 'click hither to download manually'. Right click on information technology and and so click on 'Copy link location'.

Open a new Google Colab Notebook and follow the aforementioned steps described with the Github link higher up.

2. Upload Data from your local machine to Google Drive then to Colab

If the data set up is saved on your local machine, Google Colab (which runs on a separate virtual machine on the deject) will not have direct access to it. We will therefore need to showtime upload information technology into Google Bulldoze then load information technology into Colab'due south runtime when building the model.

Annotation: if the dataset is small (such as a .csv file) you lot tin upload it straight from the local machine into Colab'southward file system during runtime. However, our Image dataset is big (543 Mb) and it took me 14 minutes to upload the zip file to Google Drive. One time on the Drive, I can now open up a Colab notebook and load information technology into runtime which will take roughly a infinitesimal (demonstrated in lawmaking below). However, If you lot were to upload information technology directly to Colab's file system, assume it will take xiv minutes to upload, and once the runtime is disconnected you have to upload it again for the same amount of fourth dimension.

Before uploading the dataset into Google Drive, it is recommended that it be a single zip file (or similar annal) because the Drive has to assign individual IDs and attributes to every file which may accept a long time.

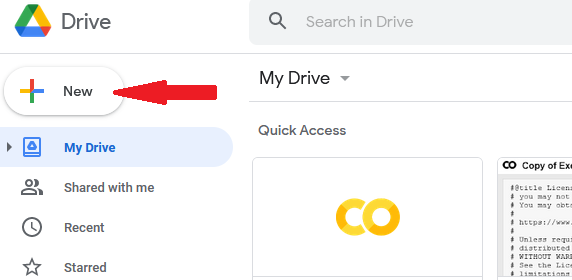

Once the dataset is saved in your local motorcar (I downloaded it from here on Kaggle), open your Google drive and click the 'New' button on the top-left. From the drop-down list, click on 'File Upload' and browse to the zip file on your organization then 'Open up' it.

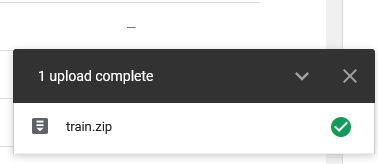

On the bottom right will appear a progress bar indicating the upload process to completion.

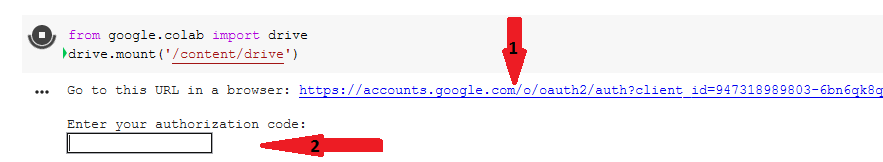

At present open a new Colab notebook. The first block of code mounts the Google bulldoze so that it'southward accessible to Colab'southward file system.

Colab will provide a Link to authorize its access to your Google account's Bulldoze.

Click on this link (arrow 1 above), cull your email address, and at the bottom click on 'Allow'. Next, copy the lawmaking from the pop-upwards and paste it in Colab's textbox (pointer 2) and finally this message will be displayed: Mounted at /content/bulldoze .

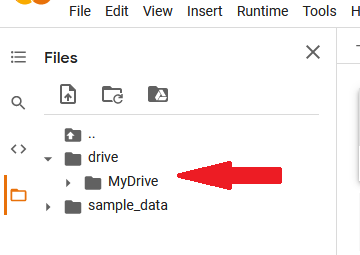

Now when we look at the files at the left of the screen, nosotros run across the mounted bulldoze.

Side by side is to read the dataset into Colab'south file system. We volition unzip the folder and extract its contents into the /tmp binder using the code below.

Now you lot can view the path to Colab's /tmp/train folder from the file system.

Use bone.listdir(path) method to return a listing of the contents in the data folder. The code below returns the size (number of images) in train binder.

three. Upload a dataset from Kaggle

Kaggle is a website where data scientists and machine learning practitioners interact, larn, compete and share code. Information technology offers a public information platform and has thousands of public datasets either from past or ongoing Kaggle competitions, or uploaded past customs members who wish to share their datasets.

The Cats vs dogs dataset was used in a machine learning contest on Kaggle in 2013. We will download this dataset directly from Kaggle into the Colab's filesystem. The following steps are essential considering you require authentication and permissions to download datasets from Kaggle.

Stride I: Download the configuration file from Kaggle

Kaggle provides a way to interact with its API. Yous need a costless Kaggle account and be signed in.

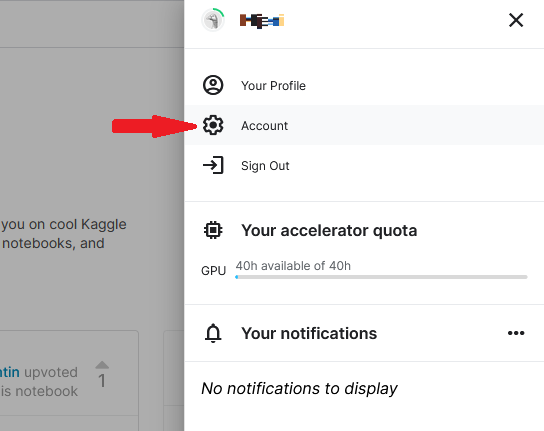

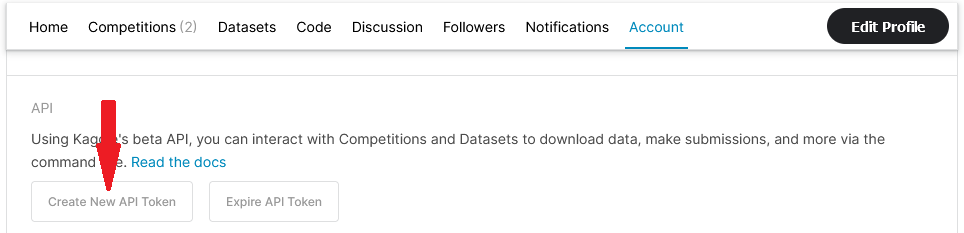

Click on your avatar on the summit right of your homepage. Choose 'Account' from the drib-down menu.

On the 'Account' page, Scroll downwards to the 'API' department and click on 'Create New API Token'.

You volition be prompted to download a file, kaggle.json. Cull to save information technology to your local machine. This is the configuration file with your credentials that y'all will utilise to straight admission Kaggle datasets from Colab.

Note: Your credentials on this file expire afterwards a few days and you will get a 401: Unauthorised error in the Colab notebook when attempting to download datasets. Delete the old kaggle.json file and come up back to this step.

Step Two: Upload the configuration file to a Colab notebook

Now with a fresh kaggle.json file, you can exit it on your local machine (step Two a), or relieve (upload) information technology on Google Bulldoze (step Two b).

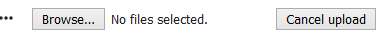

Footstep Two (a) : If the file remains on your local machine, you can upload information technology into Colab's current working directory using the upload icon, or using Colab's files.upload(). This option is simpler if yous use the same computer for your projects. If using code (non upload icon), run the code below in a new notebook, browse to the downloaded kaggle.json file, and 'Open up' it.

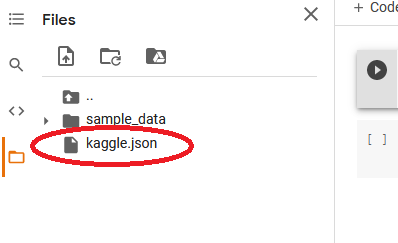

The file volition appear in your working directory.

Step Two (b) : The 2d choice is to save (upload) the kaggle.json file to Google Drive, open a new Colab notebook and follow the simple steps of mounting the Drive to Colab's virtual arrangement (demonstrated beneath). With this 2nd option, y'all can run the notebook from any machine since both Google drive and Colab are hosted on the cloud.

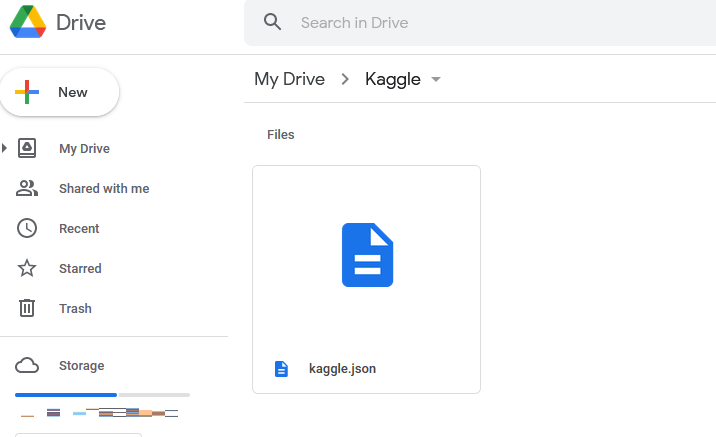

On Google Bulldoze, create a new folder and call information technology Kaggle. Open up this folder past double-clicking on it and upload kaggle.json file.

Next, open a Colab notebook and run the lawmaking below to mountain the Drive onto Colab'south file arrangement.

Once this is successful, run the lawmaking below to re-create kaggle.json from the Drive to our current working directory which is /content ( !pwd returns the current directory).

Refresh the files section and locate kaggle.json in the current directory.

Step 3: Point the os environment to the location of the config file

Now, nosotros will use the bone.environ method to prove the location of kaggle.json file that contains the hallmark details. Remember to import the files needed for this.

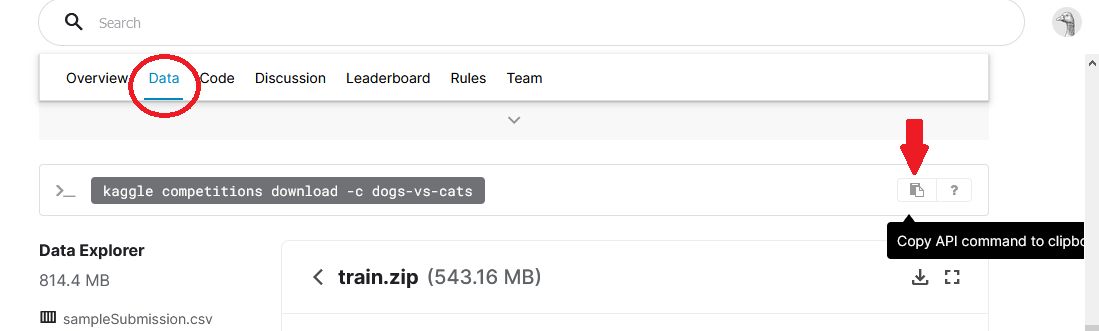

Step iv: Re-create the dataset'due south API control from Kaggle

Every dataset on Kaggle has a corresponding API command that you lot tin run on a command line to download it. Note: If the dataset is for a contest, go to that competition's page and 'read and have the rules' that enable you to download the information and make submissions, otherwise you will get a 403-forbidden mistake on Colab.

For our Dogs vs Cats dataset, open the competition page and click on the 'Data' tab. Curl down and locate the API command (highlighted in black beneath).

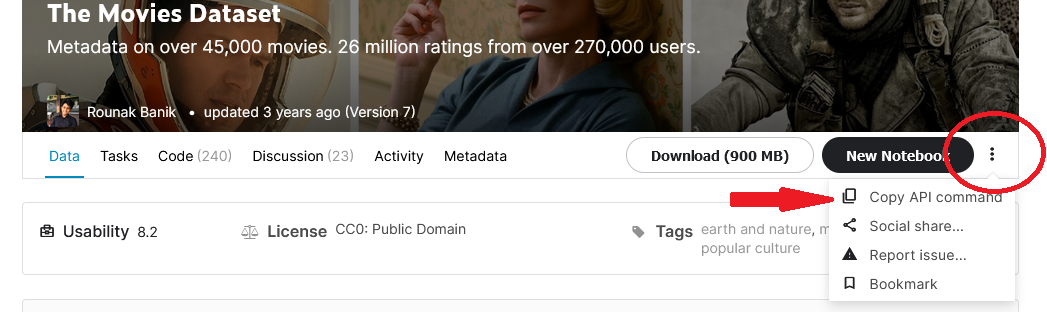

For other datasets (non competitions), click on the three dots next to the 'New notebook' button then on 'Copy API command'.

Step 5: Run this API command on Colab to download the information

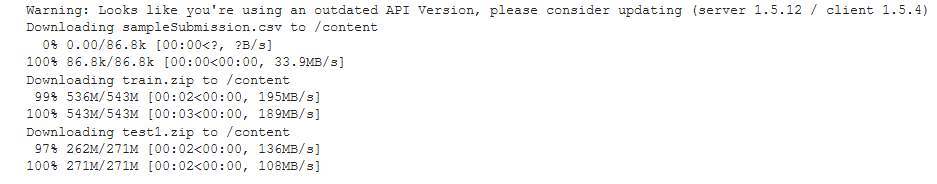

Back to our Colab notebook, paste this API command to a new block. Recollect to include the ! symbol that tells python that this is a control-line script.

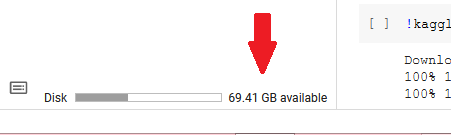

If all works well, confirmation of the download will be displayed. I got the results below.

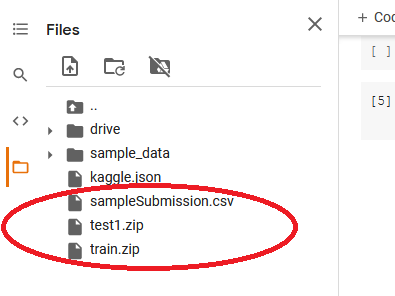

At present from the Files pane on the left, you lot tin can run across all the folders that were downloaded.

Step 6: Excerpt the files

At present we can extract the files in train.zip file and save them in /tmp (or the current directory /content).

Run the code below to return the number of files in the extracted binder.

Conclusion

In this tutorial, we explored how to upload an image dataset into Colab's file system from websites such equally Github, Kaggle, and from your local machine. Now that you have the data in storage, you tin can train a deep learning model such as CNN and attempt to correctly classify new images. For bigger prototype datasets, this article presents alternatives. Cheers for making it to the end. Wish you the best in your deep learning journey.

Source: https://towardsdatascience.com/an-informative-colab-guide-to-load-image-datasets-from-github-kaggle-and-local-machine-75cae89ffa1e

Posted by: burdettthety1995.blogspot.com

0 Response to "How To Upload A Dataset File Into R"

Post a Comment